Memory hierarchy design and cache management are central to computer architecture because they determine how quickly a processor can reach the data it needs. This article explains how the memory hierarchy is organized, how caches are managed, the main challenges involved, and the strategies used to balance performance against capacity and cost.

What is Memory Hierarchy?

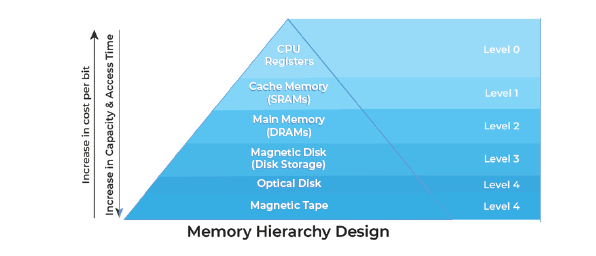

Memory hierarchy design exploits the trade-off between speed and capacity. A computer's memory hierarchy has multiple levels, each optimized for a specific purpose: registers, caches, main memory (RAM), and secondary storage (hard drives, SSDs). The closer a level sits to the processor, the faster it can be accessed; registers are the fastest level but also the smallest.

Types of Memory Hierarchy

Each level of the hierarchy differs in speed, capacity, and cost per byte. The common levels, from fastest to slowest, are:

1. Registers: At the highest and fastest level of the memory hierarchy are registers. These are small, high-speed storage units located within the processor itself. Registers hold data that the CPU is currently processing. Due to their proximity to the processor, registers offer the fastest access times but have limited capacity.

2. L1 Cache: The Level 1 cache, or L1 cache, is a small but very fast memory located within the CPU core. It stores frequently accessed data and instructions, reducing the time it takes for the CPU to fetch information from main memory. L1 cache is typically split into separate instruction and data caches.

3. L2 Cache: The Level 2 cache, or L2 cache, is a larger but slightly slower memory that is also located near the CPU. It serves as a secondary cache and helps reduce the number of cache misses from the L1 cache. L2 cache is shared among multiple cores in some multi-core processors.

4. L3 Cache: In multi-core processors, there may be a Level 3 cache, or L3 cache, which is larger but slower than the L2 cache. The L3 cache is shared among all cores and provides a buffer between the processor cores and the main memory.

5. Main Memory (RAM): Main memory, often referred to as RAM (Random Access Memory), is the primary volatile memory in a computer. It stores the data and instructions that the CPU is actively using. Main memory has a much higher capacity than the caches, but also higher latency and lower bandwidth.

6. Solid-State Drives (SSDs): SSDs are non-volatile storage devices that provide a larger capacity than main memory and are used for storing both data and software. They offer faster access times compared to traditional hard drives (HDDs), but are slower than cache or main memory.

7. Hard Disk Drives (HDDs): HDDs are traditional mechanical storage devices with slower access times compared to SSDs. They provide higher capacity and are often used for long-term storage of data and applications.

8. Optical Drives: Optical drives, such as CD/DVD/Blu-ray drives, offer a slower but inexpensive option for storing and reading data.

9. Tertiary Storage: Tertiary storage includes external storage devices such as network-attached storage (NAS) and cloud storage. While they offer large capacities, they have higher latency and are accessed over networks.

Each level of the memory hierarchy serves a specific purpose and strikes a balance between access speed, capacity, and cost. The goal of the memory hierarchy is to ensure that the CPU can efficiently access the required data while optimizing overall system performance.

System Supported Memory Standards in Memory Hierarchy

The table below summarizes typical characteristics of each level of the memory hierarchy:

| Level | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| Name | Register | Cache | Main Memory | Secondary Memory |
| Size | < 1 KB | < 16 MB | < 16 GB | > 100 GB |
| Implementation | Multi-ported | On-chip SRAM | DRAM (capacitor-based) | Magnetic |
| Access Time | 0.25 to 0.5 ns | 0.5 to 25 ns | 80 to 250 ns | 5,000,000 ns (5 ms) |
| Bandwidth (MB/s) | 20,000 to 100,000 | 5,000 to 15,000 | 1,000 to 5,000 | 20 to 150 |
| Managed by | Compiler | Hardware | Operating System | Operating System |
| Backing Mechanism | From cache | From main memory | From secondary memory | from ie |

Understanding Cache Management

Cache memory acts as a bridge between the processor and main memory, alleviating the performance bottleneck caused by the speed disparity between the two. Cache management is the art of optimizing cache utilization to ensure that frequently accessed data is readily available, reducing the need to fetch data from slower main memory.
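
The benefit of a cache is often quantified as the average memory access time (AMAT): the hit time plus the miss rate multiplied by the miss penalty. The sketch below is illustrative only. The function name `amat`, the 5% miss rate, and the 1 ns and 100 ns latencies are assumptions, with the latencies chosen from within the ranges in the table above.

```python
def amat(hit_time_ns: float, miss_rate: float, miss_penalty_ns: float) -> float:
    """Average memory access time: every access pays the hit time,
    and the fraction that misses also pays the miss penalty."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return hit_time_ns + miss_rate * miss_penalty_ns


# Assumed figures: a 1 ns cache hit, a 100 ns main-memory access,
# and a 5% miss rate.
without_cache_ns = 100.0
with_cache_ns = amat(hit_time_ns=1.0, miss_rate=0.05, miss_penalty_ns=100.0)
print(f"no cache: {without_cache_ns:.1f} ns, with cache: {with_cache_ns:.1f} ns")
```

Under these assumptions, even a modest hit rate brings the average access time much closer to the cache latency than to the main-memory latency.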

Strategies for Efficient Cache Management

1. Cache Mapping: Cache mapping techniques determine how data is placed in cache lines. Common methods include direct-mapped, set-associative, and fully-associative mapping. Each has its trade-offs in terms of efficiency and complexity.

2. Replacement Policies: When the cache location that a new block maps to is already full, a decision must be made about which existing line to evict. Replacement policies like Least Recently Used (LRU), Random, and Least Frequently Used (LFU) dictate which cache line gets replaced.

3. Write Policies: Write policies govern how write operations are handled in the cache. Write-through policies immediately update both cache and main memory, ensuring data consistency but potentially slowing down write-intensive tasks. Write-back policies update only the cache and defer the main memory update until the modified line is evicted, which improves write performance.

4. Cache Coherency: In multiprocessor systems, cache coherency ensures that multiple caches containing copies of the same data remain consistent. Protocols like MESI (Modified, Exclusive, Shared, Invalid) manage cache coherency to prevent data inconsistencies and conflicts.
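
The first three strategies can be seen working together in a toy simulator. The following is a minimal sketch, not a model of any real processor: the class name `SetAssociativeCache`, its parameters, and the access trace are all hypothetical. It splits each address into a tag and a set index (mapping), evicts the least recently used line in a full set (replacement), and counts a memory write only when a modified line is evicted (write-back).

```python
from collections import OrderedDict


class SetAssociativeCache:
    """Toy N-way set-associative cache with LRU replacement and write-back."""

    def __init__(self, num_sets: int, ways: int, line_size: int) -> None:
        self.num_sets = num_sets
        self.ways = ways
        self.line_size = line_size
        # One OrderedDict per set: tag -> dirty flag, least recently used first.
        self.sets = [OrderedDict() for _ in range(num_sets)]
        self.hits = 0
        self.misses = 0
        self.writebacks = 0

    def access(self, address: int, write: bool = False) -> bool:
        """Simulate one access; return True on a hit."""
        block = address // self.line_size   # drop the byte offset
        index = block % self.num_sets       # set the block maps to
        tag = block // self.num_sets        # identifies the block within its set
        lines = self.sets[index]

        if tag in lines:
            self.hits += 1
            lines.move_to_end(tag)            # mark as most recently used
            lines[tag] = lines[tag] or write  # a write makes the line dirty
            return True

        self.misses += 1
        if len(lines) >= self.ways:
            _, dirty = lines.popitem(last=False)  # evict least recently used
            if dirty:
                self.writebacks += 1  # write-back: only dirty lines reach memory
        lines[tag] = write
        return False


# Hypothetical trace: all five addresses map to set 0 of a 2-set, 2-way cache.
cache = SetAssociativeCache(num_sets=2, ways=2, line_size=16)
for addr, is_write in [(0, True), (32, False), (0, False), (64, False), (32, False)]:
    cache.access(addr, write=is_write)
print(cache.hits, cache.misses, cache.writebacks)
```

In this sketch, setting `ways=1` gives a direct-mapped cache and `num_sets=1` gives a fully associative one. A write-through variant would drop the dirty flag and count a memory write on every write access instead.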

Challenges and Evolving Solutions

Memory hierarchy design and cache management are not without challenges. The growing gap between processor speeds and memory latency exacerbates the "memory wall" problem. Complex workloads and data dependencies can lead to cache thrashing, where lines are repeatedly evicted and reloaded before they can be reused, so the cache delivers little benefit. Moreover, cache management becomes intricate in heterogeneous systems, where different processing units have varying memory access patterns.
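
Cache thrashing can be reproduced with a very small experiment. The sketch below is a simplified illustration with assumed parameters: the helper `hit_rate`, the 512-byte cache size, and the alternating address trace are all hypothetical. It compares a direct-mapped cache with a 2-way set-associative cache of the same capacity on a trace where two addresses compete for the same location.

```python
def hit_rate(addresses, num_sets, ways, line_size=64):
    """Hit rate of an LRU cache with the given geometry over an address trace."""
    addresses = list(addresses)
    if not addresses:
        return 0.0
    sets = [[] for _ in range(num_sets)]  # each list holds tags, LRU first
    hits = 0
    for address in addresses:
        block = address // line_size
        tags = sets[block % num_sets]
        tag = block // num_sets
        if tag in tags:
            hits += 1
            tags.remove(tag)
        elif len(tags) >= ways:
            tags.pop(0)        # evict the least recently used tag
        tags.append(tag)       # most recently used goes last
    return hits / len(addresses)


# Two addresses exactly one cache size apart (512 bytes), accessed alternately.
trace = [0, 512] * 50
print(hit_rate(trace, num_sets=8, ways=1))  # direct-mapped, 8 lines of 64 bytes
print(hit_rate(trace, num_sets=4, ways=2))  # 2-way, same total capacity
```

In the direct-mapped configuration the two addresses map to the same line and evict each other on every access, so nothing is ever reused. With two ways per set, both blocks fit side by side and only the first two accesses miss.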

To address these challenges, modern processors incorporate sophisticated hardware and software techniques. Advanced prefetching algorithms predict and load future data into cache, reducing cache misses. Adaptive replacement policies dynamically adjust based on access patterns. Research into non-volatile memory technologies like phase-change memory (PCM) offers the potential to bridge the gap between memory and storage, revolutionizing memory hierarchy design.
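
As a rough illustration of prefetching, the sketch below models a next-line prefetcher, one of the simplest sequential schemes: on every demand miss for a block, it also loads the following block. Real prefetchers are implemented in hardware and are considerably more sophisticated; the function `count_misses`, the cache size, and the scan trace are assumptions made for this example.

```python
from collections import OrderedDict


def count_misses(addresses, capacity_lines=8, line_size=64, prefetch=False):
    """Count demand misses in a small fully associative LRU cache.

    With prefetch=True, every demand miss on block b also loads block b + 1.
    """
    cache = OrderedDict()  # block number -> None, least recently used first
    misses = 0

    def load(block):
        """Bring a block into the cache, evicting the LRU block if full."""
        if block in cache:
            cache.move_to_end(block)
            return
        if len(cache) >= capacity_lines:
            cache.popitem(last=False)
        cache[block] = None

    for address in addresses:
        block = address // line_size
        if block in cache:
            cache.move_to_end(block)  # hit: refresh recency
        else:
            misses += 1
            load(block)
            if prefetch:
                load(block + 1)       # speculatively fetch the next line
    return misses


# A sequential scan that touches 64 consecutive cache lines once each.
scan = range(0, 64 * 64, 64)
print(count_misses(scan), count_misses(scan, prefetch=True))
```

On a purely sequential scan like this one, every second line is already present when it is requested, so the prefetching variant takes half as many demand misses. On irregular access patterns the same scheme can waste bandwidth and evict useful lines, which is one reason adaptive approaches exist.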


Conclusion

Memory hierarchy design and cache management underpin efficient, high-performance computing systems. By coordinating the different memory levels and optimizing cache utilization, computer architects let processors run closer to their full potential while minimizing latency and wasted resources. As technology advances, memory hierarchy design and cache management continue to evolve to keep performance and efficiency in balance.

Frequently Asked Questions (FAQs)

Here are some of the frequently asked questions about memory hierarchy.

1: What is the purpose of the memory hierarchy in computer systems?

Answer: The memory hierarchy in computer systems serves to balance the trade-off between speed, capacity, and cost. By organizing different levels of memory, from registers to caches to main memory and storage, the memory hierarchy ensures that data is accessible quickly by the CPU while optimizing overall system performance and resource utilization.

2: How does cache memory improve processor performance?

Answer: Cache memory, such as L1, L2, and L3 caches, improves processor performance by storing frequently accessed data and instructions close to the CPU. This reduces the time it takes for the CPU to retrieve data from slower main memory. Cache memory helps minimize the impact of memory latency, speeding up instruction execution and data manipulation.

3: What is the difference between RAM and cache memory?

Answer: RAM (Random Access Memory) and cache memory serve different roles in the memory hierarchy. Cache memory is faster but smaller and more expensive, storing frequently used data for quick access by the CPU. RAM is larger and slower than cache, serving as the main working memory where active data and programs are stored during execution.

4: How do different levels of cache work together in multi-core processors?

Answer: In multi-core processors, each core typically has its own L1 and L2 caches. These caches store data specific to the core’s operations. The L3 cache, which is shared among all cores, acts as a larger buffer that stores data shared between cores. This arrangement optimizes data sharing and reduces cache contention among cores, enhancing overall system performance.

5: What is the role of solid-state drives (SSDs) in the memory hierarchy?

Answer: Solid-state drives (SSDs) play a role in the memory hierarchy by providing non-volatile storage that sits between main memory and traditional hard disk drives (HDDs). While slower than cache and main memory, SSDs offer faster access times and higher data transfer rates compared to HDDs. SSDs are used for storing data and applications, striking a balance between speed and capacity in storage solutions.